Requirements-first Development with ChatGPT

How ChatGPT changes my approach to programming

How does ChatGPT change programming? The answer is simple: In many ways — we cannot even fathom yet the changes it will bring. It’s an amazing tool still in its infancy.

But let’s not turn into Mowglis hypnotized and strangled by an AI Kaa. We should not freeze and wait for this storm to pass. Because it won’t.

Better to explore the tool and integrate it into our projects wherever it seems helpful already. The earlier the better. No need to wait.

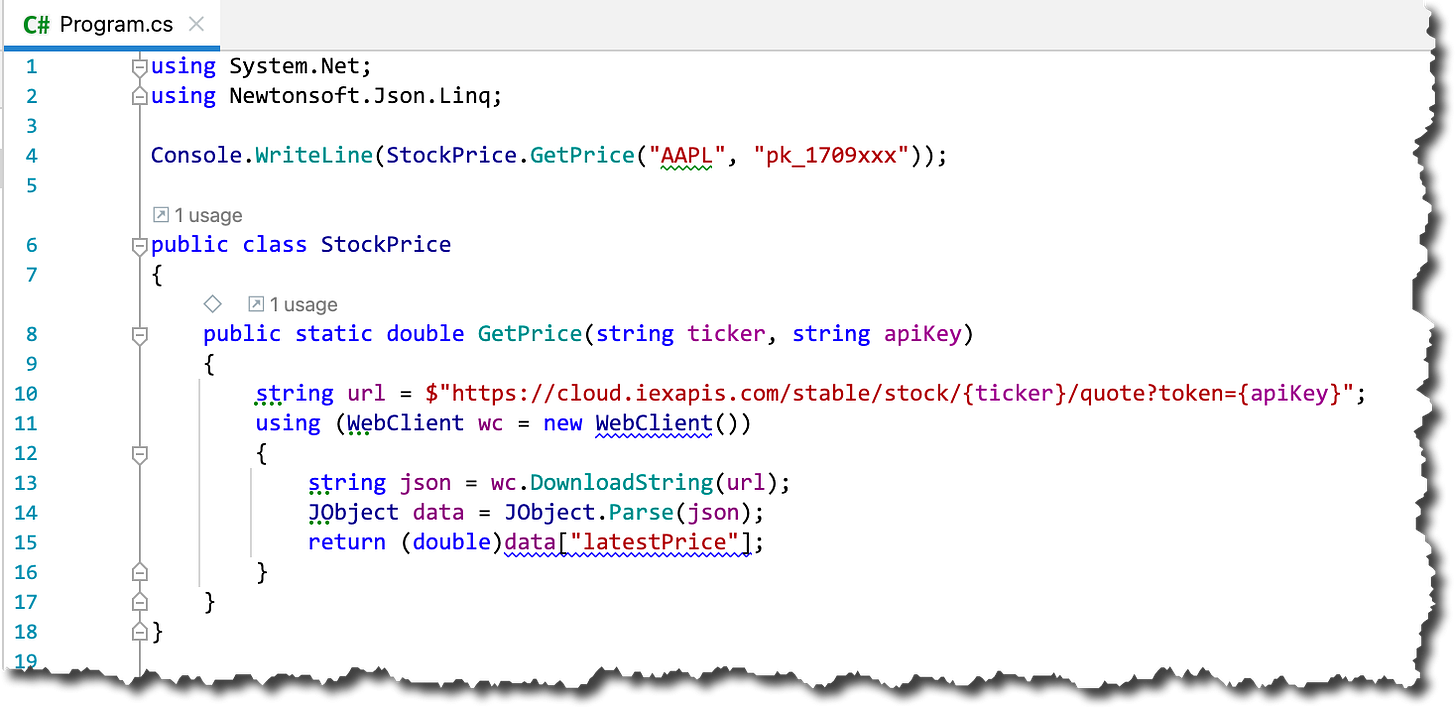

There are loads of examples already of how ChatGPT is writing code to solve certain problems. Here’s just a tiny example I tried this minute: getting the price of a stock.

This code was working just fine once put into a C# project. I only needed to get an API key from IEX Cloud.

All in all it was a matter of about 3 minutes from question to the first stock price printed to my console. Including getting the API key which took the most time.

I clearly remember how much, much longer and more tedious this was just half a year ago when I tried the same scenario while working on ways to improve handling my finances.

So, that kind of help is great. One of the problems of modern software development is the exploding number of APIs you need to know to access all kinds of resources. To me that’s a pain. I want to focus on solving a business problem, not drown in API details.

Production code logic is put into a black box

However, I think these kinds of feats are pointing in the wrong direction. It’s “just” IntelliSense 10 levels up. It’s faster horse carriages, not cars. Because this way we’re still stuck with, well, code. Or to be more precise: logic in production code.

As long as that’s the case people will complain about such code not being professional, e.g. not using this kind of language construct or that kind of framework or not being perfectly clean.

I find this misleading. Because it’s fundamentally misunderstanding what ChatGPT is doing: writing and also changing code for you. ChatGPT has to carry the burden of understanding its code, not you. Once ChatGPT is employed for writing and changing code, you need not be concerned anymore with how it’s looking. No need to read it anymore. Just lean back and enjoy the result.

This is hard to imagine as long as ChatGPT is still producing source code. I understand that. But we should try to abstract from that. Forget about production code. Forget about how a problem is solved. That’s none of your business once you start asking ChatGPT for a solution.

A developer complained to me about ChatGPT writing code only like a junior programmer. Why’s that a problem? How does that affect the solution? Does the customer suffer from junior programmer code because it’s not functional or too slow? Or is that a complaint which only makes sense if you’re a fellow programmer who later on has to deal with the junior programmer’s code? I guess the latter is the case.

I understand this sentiment very well, but it’s misguided. Because sooner rather than later the majority of code can be written by ChatGPT, and then nobody should even be looking at it. So why care if it looks like it was written by a junior programmer? ChatGPT doesn’t even need to produce C# or Java or C++ code. It could just as well generate executable code right away.

Production code logic is essentially put into a black box by ChatGPT (or should be). Developers should not open it. Just take it as is. That’s the point of black boxes: they are hiding details to make you more efficient. Your thinking can move to a higher level. Leave the details not only to the compiler, but to ChatGPT.

If that’s a prospect making you feel uneasy you’re missing a piece of the puzzle: requirements and tests.

Requirements-first development

With ChatGPT all software development necessarily will become test-first development. Because it’s the tests, as coded requirements, plus natural language requirements that instruct ChatGPT what to produce.

ChatGPT is creative. ChatGPT understands. At least it seems like that. At least it’s simulating actual understanding on such a high level that I couldn’t care less if it actually is understanding. I can have fruitful conversations with ChatGPT about code. That’s all I want.

And that’s changing everything!

If ChatGPT actually is understanding and responding with appropriate code… then I can focus on stating the requirements.

I can describe requirements in natural language and ChatGPT understands. Sometimes that’s the easiest way to ask for an implementation. However, a lot of care must be taken to be precise.

I can describe requirements in formal language, even as tests and ChatGPT understands.

One way or the other, this means requirements come first, and production code finally and truly comes second. With ChatGPT a shift is happening:

If you want to use ChatGPT’s powers, you really have to be crystal clear about what your requirements are.

No more hand-waving. No more shrugging and ignoring. You will get what you want if you can spell out what you want. If you can’t, well, you don’t get it. That simple. Finally.

ChatGPT has a superpower to rein in customers and product owners: It’s the willingness to say No! to a lack of clarity. It just doesn’t try to compensate for it by itself. It thus functions as a mirror: If you don’t like what it produces… well, that’s probably on you. Garbage in, garbage out.

Modularization is inevitable

If software development with ChatGPT is requirements-first, that means it’s test-first, and that means it’s interfaces-first. In essence it leads to Interface-Driven Development (IDD).

To apply tests there needs to be at least one interface. Since user interfaces are hard to test, it needs to be a programming interface, an API right below the user interface surface. I call it the body API (see the Sleepy Hollow Architecture for an explanation of what I mean by body).

Tests to check the body API are acceptance tests. They are very high level and only show if all the parts are actually working together. They are integration tests by nature. No detailed tests of single features and edge cases are feasible that far out from the core of an application. Nevertheless they are serving an important purpose: to show that there is a functioning whole.

To be sure all the details have been implemented correctly, more detailed tests are needed. They are like probes to be inserted into the body. But what to attach them to? Modules.

The whole needs to be made up of discernible parts with clear cut purpose of their own. Lego blocks, if you will. These parts are implemented as modules, i.e. black boxes with an interface (API) defining their services.

Developers are not alien to that concept. In fact they have been struggling to find “the right way to modularize” code for a long time. But what is “the right way”? Several forces were at play:

a module was supposed to be reusable

a module was supposed to be easy to understand and change

a module was supposed to be easy to test

In my view two of these forces are not relevant anymore with ChatGPT.

There is no need anymore to understand modules, or rather: module details. The How? of a module becomes irrelevant. We don’t want to care anymore how ChatGPT achieves the goal of satisfying all module tests.

There is no need anymore to strive for reusability. Because any code can be generated precisely for its usage scenarios. No need to save on developer time. No need to strive for DRY. Whether a number of logic statements occurs once or a thousand times in ChatGPT-generated code is of no consequence to any human.

The only reason for a module to exist is that it makes it possible to test requirements for the purpose of directing ChatGPT in its implementation.

And while I’m at destroying a dearly held conviction or two, let me say: Clean code development is pretty irrelevant for new code. Because new code won’t be read by humans anymore. Humans will only be concerned with the tests and the interfaces to be tested. [1]

What’s required in the future are skills to formulate and formalize requirements, and to design a module structure that makes it straightforward to apply those requirements so ChatGPT can generate code.

An inevitable Hello-World example

A new programming language is always introduced with a Hello-World application. So let me show you what I mean by the above with a simple example.

It won’t be about smart API usage or intricate algorithms. Its focus is solely on the approach I’ve described.

Here’s what an imagined customer wants:

A program that can be run multiple times at consecutive parties. Each party guest registers with the program by typing his/her name and then is personally greeted with a message. The exact message text depends on how often the guest has visited parties. Example:

$ hello_app

Please enter your name: Peter

Hello, Peter!

Please enter your name: Paul

Welcome back, Paul!

…

Paul sees a different message than Peter because Paul is a recurring guest while Peter appears at these parties for the first time.

Modularization

The program is very small. No modules are needed. Nevertheless I want to define some to show you what I mean by requirements-first targeted at modules.

I envision at least two modules:

persisting the guest registrations

generating the greeting messages

Both modules will be integrated by the main program which is doing all the interaction with the guests. That’s hard to test anyway so I don’t extract a module for that.

Please note that I, as the designer of the structure, am not only deciding which modules there will be, but also how they should depend on each other. Following the IOSP (Integration Operation Segregation Principle), my decision is that the two modules don’t know each other.
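To make the idea of two modules that don’t know each other a bit more tangible, here is a minimal sketch of what their interfaces could look like. It is written in Python, not in the C# I used in the chat, and all names (`GuestRegistry`, `GreetingGenerator`, `register`, `visit_count`, `greet`) are assumptions made up for illustration. They are not the interfaces I actually gave to ChatGPT.

```python
from typing import Protocol, runtime_checkable


@runtime_checkable
class GuestRegistry(Protocol):
    """Hypothetical interface: persists guest registrations."""

    def register(self, name: str) -> None:
        """Record one more party visit for the guest."""
        ...

    def visit_count(self, name: str) -> int:
        """Return how many visits have been recorded for the guest."""
        ...


@runtime_checkable
class GreetingGenerator(Protocol):
    """Hypothetical interface: turns a name and a visit count into a message."""

    def greet(self, name: str, visits: int) -> str:
        ...
```

Neither interface mentions the other. Only the integrating main program would see both, which is the dependency decision described above.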

Module implementation

With this overall structural concept of the application I can start asking ChatGPT for implementations. Here’s my request for the first module:

I don’t have a clue about the implementation ChatGPT will come up with. I’m just concerned about its services. So I’m telling ChatGPT the API and adding that the data needs to be persisted. If I didn’t tell ChatGPT that, it would go for an in-memory solution.

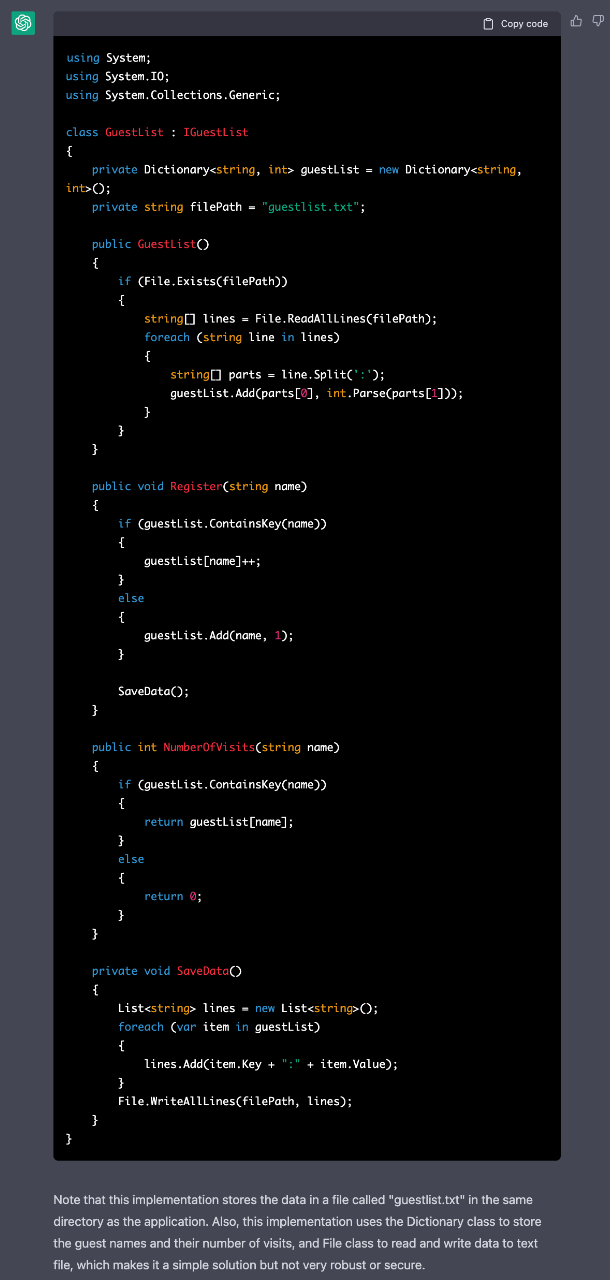

What’s ChatGPT’s response to this request? A class implementing the interface as stated. And logic for all the interface methods. You don’t really need to see any details.

By the way: Never mind the primitive and even wrong test cases I provided. ChatGPT understood them just fine. It did not mind that Assert() is a function not of the Console{} class but of the Debug{} class.

I know, it’s hard for you to not see the generated code. So here it is:

It’s conforming to the requirements. After I set up a C# project in my Rider IDE and pasted it in, it ran just fine:

What it showed me, though, were some flaws in my test! I had to fix two bugs on my side.
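For readers who want something to hold on to, here is a minimal sketch of how “interface plus test cases plus the demand for persistence” can look. It is Python rather than C#, and it is not the code ChatGPT generated for me. The class name `FileGuestRegistry`, the JSON file format, and the concrete checks are all assumptions for illustration.

```python
import json
import tempfile
from pathlib import Path


class FileGuestRegistry:
    """Counts visits per guest and persists them in a JSON file (assumed format)."""

    def __init__(self, path: Path) -> None:
        self._path = path

    def _load(self) -> dict[str, int]:
        # A missing file simply means nobody has registered yet.
        if not self._path.exists():
            return {}
        return json.loads(self._path.read_text(encoding="utf-8"))

    def register(self, name: str) -> None:
        counts = self._load()
        counts[name] = counts.get(name, 0) + 1
        self._path.write_text(json.dumps(counts), encoding="utf-8")

    def visit_count(self, name: str) -> int:
        return self._load().get(name, 0)


def check_registry_requirements() -> None:
    """The requirements for the module, written as executable checks."""
    with tempfile.TemporaryDirectory() as folder:
        path = Path(folder) / "guests.json"
        registry = FileGuestRegistry(path)
        assert registry.visit_count("Peter") == 0
        registry.register("Peter")
        registry.register("Peter")
        assert registry.visit_count("Peter") == 2
        # A fresh instance stands in for the next party: the data must survive.
        assert FileGuestRegistry(path).visit_count("Peter") == 2
```

The last check is the one that rules out an in-memory solution. How the data is stored behind the interface stays a detail nobody needs to read.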

Next is the message generator. I specified it like before: interface plus test cases.

However, I felt the test cases weren’t enough. How to tell ChatGPT that “Great to see you” was supposed to be the message from 5 visits on? Instead of making the test cases more complex or just adding more, I resorted to natural language. And that worked just fine.

This implementation delivered green tests right off the bat.
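A minimal sketch of such a message generator, again in Python and again not the generated C# code. The thresholds are assumptions: I’m assuming the visit count includes the current visit, that “Welcome back” starts with the second visit, and that the full text of the third message is “Great to see you, <name>!”. Only the two messages from the customer’s example and the rule “from 5 visits on” come from the requirements.

```python
def greet(name: str, visits: int) -> str:
    """Return the greeting for a guest; `visits` is assumed to include this visit."""
    if visits >= 5:
        # Rule stated in natural language: a special message from 5 visits on.
        return f"Great to see you, {name}!"
    if visits >= 2:
        return f"Welcome back, {name}!"
    return f"Hello, {name}!"


# Test cases as a small table: (name, visits, expected message).
REQUIREMENTS = [
    ("Peter", 1, "Hello, Peter!"),
    ("Paul", 2, "Welcome back, Paul!"),
    ("Paul", 4, "Welcome back, Paul!"),
    ("Mary", 5, "Great to see you, Mary!"),
]


def check_greeting_requirements() -> None:
    for name, visits, expected in REQUIREMENTS:
        assert greet(name, visits) == expected
```

The table makes the boundary at 5 visits explicit, which is exactly the kind of precision a natural language sentence has to carry if there are no tests for it.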

Module integration

ChatGPT keeps all it’s done in a chat session “in its mind”. Hence I can ask it to use previously mentioned or generated code in code it generates. The requirements for the missing integrating code interacting with the user thus are easily described:

No tests needed here. User interaction is hard to test automatically.

Please note ChatGPT’s explanation why it used an infinite loop. Does that sound like reasoning, deduction? To me it does. Nowhere is this requested explicitly.

Some manual work was necessary to put all the pieces together.

But that’s trivial compared to coding all the pieces myself.

After having done that the program is running. Yes, it’s running out of the box. With the safety net of the automated tests. What I get is what I specified. Not more, not less.

How cool is that?

But alas… there is a glitch. Something wasn’t specified as clearly as needed. There was leeway for ChatGPT to make a decision. And what it decided was that the name registration happens at the end of the loop. The behavior of the program deviated from my expectation so I started looking.

I stepped into the trap of not defining a body API and not providing tests. I was too sure all was fine with just module tests. And it worked — in principle. The integration is formally correct. Only a small misplacement of one function call.

Since I see the code for the program I can spot the misplacement. But I don’t want to interfere with ChatGPT. So I’m asking it to change the code. [2]

Of course ChatGPT complies diligently:

Now the program is working how the customer envisioned it! Without any production logic being implemented by a human.
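Why does the position of one function call matter so much? Here is a sketch of one plausible reading of the glitch, in Python and with made-up names. I’m assuming the greeting depends on a visit count that should already include the current visit. `InMemoryRegistry`, `welcome_register_first`, and `welcome_register_last` are hypothetical and only stand in for the real modules.

```python
from typing import Callable


class InMemoryRegistry:
    """Stand-in for the persistence module, kept in memory for brevity."""

    def __init__(self) -> None:
        self._counts: dict[str, int] = {}

    def register(self, name: str) -> None:
        self._counts[name] = self._counts.get(name, 0) + 1

    def visit_count(self, name: str) -> int:
        return self._counts.get(name, 0)


def greet(name: str, visits: int) -> str:
    """Simplified greeting rule: recurring guests are welcomed back."""
    return f"Welcome back, {name}!" if visits >= 2 else f"Hello, {name}!"


Greeter = Callable[[str, int], str]


def welcome_register_first(name: str, registry: InMemoryRegistry, greeter: Greeter) -> str:
    # Register before greeting: the count includes the current visit.
    registry.register(name)
    return greeter(name, registry.visit_count(name))


def welcome_register_last(name: str, registry: InMemoryRegistry, greeter: Greeter) -> str:
    # Register after greeting: the count lags one visit behind.
    message = greeter(name, registry.visit_count(name))
    registry.register(name)
    return message
```

Both variants pass every module test, because both modules are used correctly. Only at the second visit does the difference show: the first variant answers “Welcome back, Paul!”, the second still says “Hello, Paul!”. That is behavior only a test on the integration level can pin down.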

Refactoring

I don’t need to be concerned with the code for the program. But since missing tests were causing a problem, I think some refactoring is warranted. I want to make it testable on this level.

For that I first ask ChatGPT to extract the logic into yet another module:

And then the tricky interaction logic is extracted to be able to replace it in a test, if I want to:

Please note how ChatGPT identified which parts of the existing code should go into which of the interface functions.
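To illustrate what this refactoring buys, here is a minimal sketch in Python. It is not the C# code from the chat. The names `UserInterface`, `ScriptedUI`, and `PartyApp` are hypothetical, the greeting rule is simplified, and returning `None` to end the loop is my assumption to make the otherwise infinite loop testable.

```python
from typing import Optional, Protocol


class UserInterface(Protocol):
    """Hypothetical interface for the extracted interaction logic."""

    def ask_name(self) -> Optional[str]:
        """Return the next guest name, or None when no guest is left."""
        ...

    def show(self, message: str) -> None:
        ...


class ScriptedUI:
    """Test replacement for the console: feeds names, records messages."""

    def __init__(self, names: list[str]) -> None:
        self._names = list(names)
        self.shown: list[str] = []

    def ask_name(self) -> Optional[str]:
        return self._names.pop(0) if self._names else None

    def show(self, message: str) -> None:
        self.shown.append(message)


class PartyApp:
    """The extracted module: the whole program behind a testable API."""

    def __init__(self, ui: UserInterface, visits: dict[str, int]) -> None:
        self._ui = ui
        self._visits = visits

    def run(self) -> None:
        while True:
            name = self._ui.ask_name()
            if name is None:
                break
            # Register first, then greet based on the updated count.
            self._visits[name] = self._visits.get(name, 0) + 1
            word = "Welcome back" if self._visits[name] > 1 else "Hello"
            self._ui.show(f"{word}, {name}!")


def acceptance_test() -> list[str]:
    """Replays the customer's example: Paul has been to a party before."""
    ui = ScriptedUI(["Peter", "Paul"])
    PartyApp(ui, visits={"Paul": 1}).run()
    return ui.shown
```

With the interaction replaced by a script, the customer’s example session becomes an automated acceptance test on the level where the glitch occurred.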

To round off the project I asked ChatGPT for a visualization of the application structure. It cannot generate images itself, but at least a textual representation was provided.

When I put this description into a Mermaid editor the output looked as expected.

A great documentation of which modules exist and can be used in future iterations.

Summary

I’m really impressed by ChatGPT. The conversation with it felt very natural. It understood my requirements in natural language as well as formal language. It produced code that did exactly what I had specified, and showed me where my requirements were lacking in clarity. I was to blame, not ChatGPT.

Sure, this might not be the result in all cases. Yet. But it’s getting there. I’m sure of that. Whoever today is a cheap code monkey will be out of a job soon.

And the same may be true for people building abstractions. ChatGPT pretty much does not care about abstractions. Abstractions are for humans who need to understand code already written and have limited time for new code. Not so ChatGPT.

What’s needed are ways to describe requirements more easily, more clearly, more comprehensively right from the start. And that’s not a small challenge, I guess.

Even though it’s still early in the development of AI like ChatGPT I think that much should be clear: the days for developers fiddling with bits and specializing in knowing arcane APIs are numbered. This will be frustrating for many — but it will be a boost for delivering even more powerful software more quickly.

My recommendation for today: get your hands on ChatGPT! Be relentless in finding ways to get yourself onto the next level.

Assembler programming is not taught anymore. Just 40 years ago, though, that was still standard. Structured programming and object-oriented programming and even functional programming will suffer the same fate — possibly earlier than 40 years from now.

Sure, this will not happen overnight. And there is a lot of old code still under maintenance by humans only. And for some stuff ChatGPT won’t come up with good enough solutions. But the amount of code where “clean” is important will shrink enormously.

[2] I now realize that my request is referring to the code structure. That’s not good. I should have described just the wrong behavior as seen from the outside.