Event Sourcing first: That’s the future of software development, I think.

Yes, don’t start a software project by asking yourself “Should I use a relational database or is a NoSQL data store better suited?” Rather, take an event store as a given for recording all the facts about your application’s state. Don’t waste any time on designing comprehensive database schemas of any kind.

I feel old enough to make such a bold statement.

After many, many years I have come to the conclusion — slowly — that the predominant persistence paradigm is “not the real thing”.

It’s obvious, it’s accepted to the level of being completely natural and without alternative. It’s mainstream in all possible ways. It’s like water to fish: almost invisible to the developer’s mind.

But it’s also a kind of Matrix: pulling a veil over the eyes of developers and keeping them in bondage. In bondage, because under the invisible rule of CRUD, developers sweat and toil. They spend uncountable hours trying to work around the constraints of the paradigm.

CRUD: Data storage in the dark age

CRUD to me is the paradigm underlying relational databases, document stores, and object orientation. Since 1983 CRUD has stood for:

Create,

read,

update,

delete

data in place.

CRUD is rooted in the von Neumann computer architecture with its addressable memory for storing data and instructions.

An addressable memory consists of equally sized “memory cells” which can be filled with data and from which the data can then be read again. Filling a data cell is an update operation (U) of the form update(<address>, <data>), retrieving data is a read operation (R) of the form read(<address>):<data>.

The number of memory cells is fixed. Care has to be taken to use them wisely. Memory was scarce back then in the 1940s and even until recently.

Whether this memory is in RAM or on a hard disk is not important. The only “physical” operations are U(pdate) (write) and R(ead).

C(reate) and D(elete) in contrast are not “physical”. They are logical operations on the physical storage with regard to “higher level” data structures. If you want to store an integer (of fixed length), a string (of variable length), or the data about a business contact consisting of several strings (and possibly other primitive data types), sections of the memory have to be allocated (reserved). That’s what C(reate) is about.

First an area in memory is delimited (reserved) for the data structure, then the memory cells are filled with data. The operation on top of the physical storage is create(<size>):<address>. Initially storing data is thus a two-step process:

const address = create(13)

update(address, "Hello, World!")

After that the data can be read at any time by providing the address [1]:

const s = read(address)

Finally, if the data is not needed anymore, the area reserved for it can be freed. That’s the D in CRUD: delete(address).

const dobAddress = create(10)

update(dobAddress, "1972-05-07")

...

let dob = read(dobAddress)

...

update(dobAddress, "1972-05-08")

...

delete(dobAddress)

There you have it: data management with CRUD. CRUD is all you need, you could say. CRUD is what the programming language C offered. CRUD is what a file system is offering (with file paths as addresses). CRUD is what a relational database is about (which inspired the acronym in the first place).
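To make the four operations tangible, here is a minimal sketch of such a memory in JavaScript. It assumes a tiny memory of 32 one-character cells and a first-fit allocation strategy; both are illustrative assumptions, as are the internal names `cells`, `reserved`, and `sizes`. The D operation is called `remove` here because `delete` is a reserved word in JavaScript. As explained in the footnote, `create` remembers the size of each area, so `read` only needs the address.

```javascript
// Toy model of addressable memory (all names and sizes are illustrative assumptions).
const MEMORY_SIZE = 32;
const cells = new Array(MEMORY_SIZE).fill("\0");     // the "physical" cells
const reserved = new Array(MEMORY_SIZE).fill(false); // which cells belong to an area
const sizes = new Map();                             // address -> size of the area

// C: reserve `size` contiguous free cells (first fit) and return the start address.
function create(size) {
  for (let start = 0; start + size <= MEMORY_SIZE; start++) {
    const free = reserved.slice(start, start + size).every((r) => !r);
    if (free) {
      reserved.fill(true, start, start + size);
      sizes.set(start, size);
      return start;
    }
  }
  throw new Error("out of memory");
}

// Look up the size that create() recorded for an address.
function sizeOf(address) {
  const size = sizes.get(address);
  if (size === undefined) throw new Error("unknown address");
  return size;
}

// U: overwrite the cells of an area in place; the previous content is lost.
function update(address, data) {
  const size = sizeOf(address);
  if (data.length > size) throw new Error("data does not fit");
  for (let i = 0; i < size; i++) {
    cells[address + i] = i < data.length ? data[i] : "\0";
  }
}

// R: read the whole area back, dropping unused trailing cells.
function read(address) {
  const size = sizeOf(address);
  return cells.slice(address, address + size).join("").replace(/\0+$/, "");
}

// D: free the area so its cells can be reserved again.
function remove(address) {
  const size = sizeOf(address);
  reserved.fill(false, address, address + size);
  sizes.delete(address);
}

const dobAddress = create(10);
update(dobAddress, "1972-05-07");
update(dobAddress, "1972-05-08"); // the first date is gone for good
const dob = read(dobAddress);
remove(dobAddress);
```

Note how the second `update` leaves no trace of the first value: with CRUD only the latest state survives.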

It’s a CRUD world.

It seems.

But CRUD is limiting because it’s a child of scarcity.

As long as software development is shackling itself to CRUD it’s bound to stay… medieval, I’d say.

Yes, medieval, because back then all people could build was based on an understanding of physics as later expressed by Galileo and Newton. Engineering was mechanical. That was all there was for machines and buildings.

But how far has humanity come since then! Things started to change in the 18th century and further accelerated in the 19th. Electromagnetism was discovered. Electrical engineering evolved. And later relativistic physics and quantum physics. Both changed our worldview and societies and our living conditions fundamentally (for better or worse).

And software development has to go through such a revolution, too, I think.

In 2025 it’s still primarily relying on an 80-year-old storage concept. It does not matter if you use an RDBMS, a NoSQL database with JSON documents or a key-value database. The basic access paradigm is… CRUD.

Because CRUD seems so natural and obvious and all there is, like the mechanical laws of physics or that the sun seems to be revolving around the earth.

This is not totally wrong. Thinking along these lines delivers results. For example, people thousands of years ago were able to erect huge buildings and cross oceans without knowing the fuller picture of physics that we have today.

But why stop there?

As they say in software development: the solution to all problems is another indirection (or another layer of abstraction). And there are a couple of problems that still are awaiting a solution. Not the least of them all: the seemingly inevitable decline in productivity over the course of a project despite a code base growing richer with every increment.

AQ: The future of data storage

In the beginning there was only U(pdate) and R(ead). The basic operations of addressable memory of fixed size cells.

Then C(reate) and D(elete) put a layer of abstraction on top of that and allowed update and read of variable size memory areas. This made modern languages with stacks and heaps and all the mainstream database technologies possible.

But now it’s time to add yet another level of abstraction on top. Our storage paradigm should mirror the development of our hardware. We’ve left the age of scarcity behind! There is an abundance of processing power and storage. Well, at least for all practical purposes for most projects. We should thus break the conceptual chains of CRUD.

Hardware is not the limiting factor anymore. It’s the rigidity of our solutions, the code. It cannot be adapted fast enough to ever-changing requirements.

What’s keeping us back is the unquestioned assumption that we still need to replace data in a fixed amount of memory. We fear we don’t have enough memory and thus need to constantly overwrite it, and delete content when no longer needed.

This CRUD-born thinking leads to large schemas for data structures to hold only a single version of application state. Only one version of application state must ever be kept in a store: the latest.

But this is not how the world operates. In the real world everything is in constant flux. What we perceive as statically shaped objects is in perpetual change. A single shape, a static object in a certain state, is an illusion. It’s a man-made abstraction, a good enough working model to survive. But it’s far from reality.

We sure should take these abstractions seriously; not looking left and right before crossing a street can cost your life. But we should not take them literally, i.e. think they are “what’s really out there”. They are nothing more than models like a file icon on your Windows desktop and the trash can icon are models. Read “The Case Against Reality” by Donald D. Hoffman for a comprehensive discussion of this.

How does that relate to CRUD? With CRUD we’re avoiding the distinction. We are trying to take models for reality. We insist that we must persist our abstractions one-to-one in order for software to function.

This might have been inevitable for different reasons for a long time — but not anymore. Today we should look through this illusion. If we don’t, we’re delusional, mad, and deserve to continue to suffer from our misunderstanding.

The world does not consist of “things”. We are abstracting and constructing them for our convenience (aka survival). Neither does application data consist of “things”; we thus should not make it a core activity in software development to design object models or other big data models.

Please understand me correctly: I am not against object models. Classes and objects are great concepts. But they should not be primary or taken “for real”.

Objects are not discovered in requirements! It’s futile and a waste of time trying to hunt them down, sniff them out, uncover them in whatever a domain expert is telling you. When Eric Evans came up with Bounded Contexts and the Ubiquitous Language he had the right hunch. He expressed his insight that what looks like a single “thing” might in fact be multiple “things” (or seemingly multiple “things” in fact being the same). And this should be recognized by differentiating them explicitly into different contexts and defining crystal clear terms for them.

Unfortunately he did not go further. He did not transcend the notion of “thing”. Because in the end there are no “things” at all. What domain experts are talking about are facts. Facts as changing configurations of values. Sometimes they are more stable, sometimes not. Some of them are more closely related than others.

Domain experts are not developers. For them things are often less clear-cut than developers want them to be. Things now without quotes because I am not referring to “things” aka objects, but to the more general meaning of “what’s going on”.

“What’s going on” is key here! Domain experts are experts in change, in processes, in transformations. There are things happening all the time. They want to make more things happen by using software.

CRUD is fundamentally different. CRUD deals with static schemas, fixed delineations. For the domain expert, though, things are not that fixed.

That’s why I am advocating for overcoming CRUD. It’s a matter of truthfulness. We need a storage paradigm that is better aligned with reality.

There is no U(pdate) or D(elete) in reality. There is only ever new, new, new, new… Something happens, and then something else happens, and then something else and so on. The situation changes all the time. Fundamentally nothing is created or vanishes. Remember the law of conservation of energy?

This reality, I think, we need to mirror in software. That way we’d align the domain experts’ view more closely with code. If domain experts don’t think in terms of “things” (objects), who are we to dumb down their view and limit ourselves by forcefully extracting objects and other comprehensive (persistent) data structures from their use cases?

Instead we should embrace the continuous flow of events — situational associations of data — happening in a domain. First and foremost we should become faithful recorders of “what’s going on”.

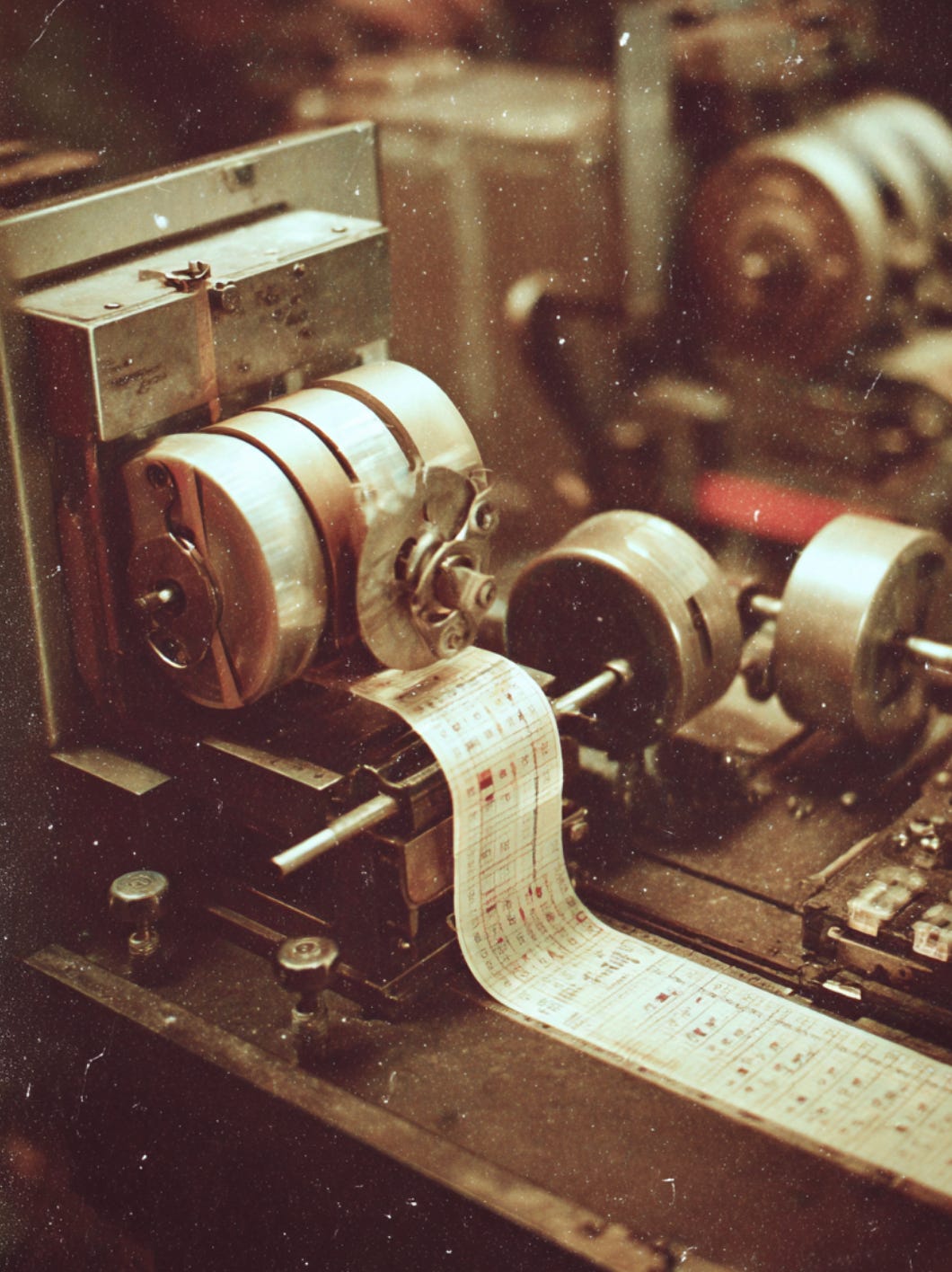

For that our understanding of memory should switch from “limited area of cells” to “limitless stream of events”.

Our core operation is not U(pdate), but A(ppend): append(<why>, <what>).

Append instead of update changes everything!

If application state can only be changed by appending to it, then what’s appended is never comprehensive like a whole object model with some new state. No, it’s just a meaningful difference in the state of a part of the whole of all application data. And this whole is all that’s factual today and was in the past. It’s a never-forgetting log of all application processes.

What part of the state changed? What are the new values?

Why did that change occur? What happened?

We cannot make do with just a change in data, e.g.

append({name: "Peter", dob: "1987-08-26"})

append({name: "Peter", dob: "1988-08-26"})

There is now a name associated with a birthdate. Great. But why? And another name/birthdate pair. What does that mean?

We also need to record why these changes happened. What was the decision behind it?

With an explicit reason for a recorded difference in state the overall picture can be very different.

append("contactAdded", {name: "Peter", dob: "1987-08-26"})

append("contactAdded", {name: "Peter", dob: "1988-08-26"})

Here the application state now contains two different contacts even though they are sharing the same name. But with the following events there is only a single contact:

append("contactAdded", {name: "Peter", dob: "1987-08-26"})

append("birthdayCorrected", {name: "Peter", dob: "1988-08-26"})

That’s what Event Sourcing is about: logging all meaningful state changes in an ever-expanding stream of events. An event store is limitless append-only memory. Nothing is ever updated or deleted. Whatever change happens to an application’s state has to be expressed as a difference to the previous state described by data (payload) “with a label” (event type) for the reason behind the change.
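How the event type changes the meaning of the same payload can be sketched in a few lines of JavaScript. This is only an illustration under assumptions: `createEventStream` and `contactsFrom` are hypothetical names, the stream lives in memory, and contacts are identified by their name simply because the examples above carry no other identifier.

```javascript
// Hypothetical in-memory event stream: events can be appended, never changed.
function createEventStream() {
  const events = [];
  return {
    append(type, payload) {
      // Freeze a copy so a recorded fact cannot be modified afterwards.
      events.push(Object.freeze({ type, payload: Object.freeze({ ...payload }) }));
    },
    all() {
      return [...events]; // hand out a copy; the log itself stays private
    },
  };
}

// Derive the current contacts by replaying the events in order.
function contactsFrom(events) {
  const contacts = [];
  for (const { type, payload } of events) {
    if (type === "contactAdded") {
      contacts.push({ ...payload });
    } else if (type === "birthdayCorrected") {
      const contact = contacts.find((c) => c.name === payload.name);
      if (contact) contact.dob = payload.dob;
    }
  }
  return contacts;
}

const twoAdds = createEventStream();
twoAdds.append("contactAdded", { name: "Peter", dob: "1987-08-26" });
twoAdds.append("contactAdded", { name: "Peter", dob: "1988-08-26" });

const addThenCorrect = createEventStream();
addThenCorrect.append("contactAdded", { name: "Peter", dob: "1987-08-26" });
addThenCorrect.append("birthdayCorrected", { name: "Peter", dob: "1988-08-26" });

const twoPeters = contactsFrom(twoAdds.all());        // two contacts named Peter
const onePeter = contactsFrom(addThenCorrect.all());  // one contact, born 1988-08-26
```

Both streams hold two events with identical payloads. Only the recorded reason decides whether replaying them yields two contacts or one, and in the second stream the original, wrong birthdate is still on record.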

But of course what goes in, must come out. What would be the point otherwise? So there is a second operation on our future storage: Q(uery), query(<filter criteria>):<events>.

An event stream can be queried for events of a certain type and with a certain payload. Code does not just add new events, it also needs to make decisions based on the application state aka the events appended so far.

Trivial example: Before the birthday of a contact can be changed, an application might want to check if the contact actually exists. Or the application wants to display a list of existing contacts. In both cases it would want to query the event stream for relevant events, e.g. “contactAdded” and “contactRemoved”.

append("contactAdded", {name: "Peter", dob: "1987-08-26"})

append("contactAdded", {name: "Paul", dob: "1992-03-12"})

append("contactRemoved", {name: "Peter"})

append("contactAdded", {name: "Mary", dob: "1959-11-10"})

With these events the list of contacts displayed to a user would only consist of two names: [“Paul”, “Mary”]. This would be a situationally relevant model, albeit a small one.

With the Q(uery) operation applications are free to construct whatever models they want from the raw event stream. They don’t have to rely on a one-size-fits-all model. Instead a multitude of models can coexist in an application, each specialized for a very limited purpose.
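A rough sketch of Q(uery) and of several small models built on top of it might look as follows. The filter shape (`types` plus an optional `where` predicate on the payload) is an assumption made for this example, not a prescribed interface, and `contactNames`, `contactExists`, and `contactsEverAdded` are hypothetical helper names.

```javascript
const events = []; // the append-only stream, kept in memory for this sketch

// A: record what happened (type) together with the changed data (payload).
function append(type, payload) {
  events.push({ type, payload });
}

// Q: return all events matching the filter criteria, in the order they occurred.
function query({ types, where } = {}) {
  return events.filter(
    (e) => (!types || types.includes(e.type)) && (!where || where(e.payload))
  );
}

append("contactAdded", { name: "Peter", dob: "1987-08-26" });
append("contactAdded", { name: "Paul", dob: "1992-03-12" });
append("contactRemoved", { name: "Peter" });
append("contactAdded", { name: "Mary", dob: "1959-11-10" });

// Model 1: the names to display in a contact list.
function contactNames() {
  const names = [];
  for (const { type, payload } of query({ types: ["contactAdded", "contactRemoved"] })) {
    if (type === "contactAdded") {
      names.push(payload.name);
    } else {
      const index = names.indexOf(payload.name);
      if (index !== -1) names.splice(index, 1);
    }
  }
  return names;
}

// Model 2: a yes/no answer for a decision, e.g. before correcting a birthday.
function contactExists(name) {
  const relevant = query({
    types: ["contactAdded", "contactRemoved"],
    where: (payload) => payload.name === name,
  });
  return relevant.length > 0 && relevant[relevant.length - 1].type === "contactAdded";
}

// Model 3: a single number that a latest-state-only store could not provide.
function contactsEverAdded() {
  return query({ types: ["contactAdded"] }).length;
}

console.log(contactNames());         // [ 'Paul', 'Mary' ]
console.log(contactExists("Peter")); // false
console.log(contactsEverAdded());    // 3
```

All three models read the same four events, yet none of them had to be anticipated in a shared schema. A further model can be added later without touching the stream or the existing ones.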

This promises to relieve developers greatly. They don’t have to compromise on a single schema to serve all kinds of different, maybe even conflicting needs in different parts of an application.

The shared data reality underlying all of the code is the event stream with its comparatively tiny data snippets (events). This is a granulate from which all kinds of abstractions of the hard data reality can be formed: be that just a single number or a wide network of objects.

Everything is possible all the time.

The event stream is pure potential. It’s like the quantum field out of which elementary particles materialize which seem to form our “real world objects”.

We only need Append and Query or AQ to realize this potential.

That’s why I am claiming Event Sourcing is the future of software development. It’s the simplicity that’s so alluring and promising. And it’s the truth in it: finally model and reality are distinguished.

Reality is the stream of events. Those are the facts of what happened in/to an application.

And models are consciously constructed abstractions of that on demand and even just in time in a multitude of shapes and sizes.

AQ over CRUD!

What a beautiful paradigm!

[1] Why only the address and not also the size? Because I am assuming that address and size are associated internally upon creation of a “data area”. C(reate) and D(elete) are operations on top of the physical memory which can provide a little abstraction. Whereas the physical memory consists of equally sized cells, the logical memory spanned by C and D (and matching R and U) consists of differently sized areas.

Beautifully written, Ralf! 😀

I just have to add that - with our arrogance as software developers - „we“ have educated domain experts to think in „things“ … and change their role to be „product owners“. 😉

How does reporting work? All report engines I know about expect a database. Would you have some scheduled job or event listener that lifts event data into a read-only database schema? So this reporting schema would be a secondary data store for the purpose of supporting reports, and not the real primary data store?